EMS17 Abstracts

Welcome to EMS17 Nagoya, Japan

Date: 5-8 September 2017

Venue: Nagoya City University, Kita-Chikusa campus, School of Design and Architecture, Center for Environmental Design

Conference Theme: Communication in/through Electroacoustic Music

The conference theme considers cultural/intercultural communication in/through electroacoustic music. Communication is possible among people who share certain common bases such as language, logic, sense, perception, listening contexts, etc. What are the common bases for electroacoustic music? How are these manifested in intercultural situations? Topics concerning technical application within electroacoustic music regarding communication systems such as interaction, telematics, SNS etc. are also welcomed.

Other paper themes are welcome concerning any topics within the field of electroacoustic music studies. For example, presentations can be given on research on electroacoustic music history, aesthetics, analysis of a piece, social aspects, terminology, taxonomy, sound ecology, genres and styles, pedagogy, research trends, etc.

Keynote Lecture

Prof. Yuji Numano

Professor of Musicology at the Toho Gakuen School of Music, Tokyo. He holds a Ph.D. from Tokyo University of the Arts. In 2008-2009, he was a visiting fellow at Harvard University. His publications include “Ligeti, Berio, Boulez: the end of avant-garde and the future of art” (2005), “The history of Japanese Contemporary Music since 1945” (2007), “Edgard Varese and his Utopian Idealism: the detail of unfinished Espace” (2009), and “A guide to fundamental musical analysis” (2017).

Rethinking “The Liberation of Sound”

“Our musical alphabet is poor and illogical. Music, which should pulsate with life, needs new means of expression, and science alone can infuse it with youthful vigor.”

Thus wrote Edgard Varèse in 1917, just 100 years ago, in the magazine “391” published by the Dadaist Francis Picabia. Varèse continually searched for new musical instruments beyond the restrictions of traditional ones, and for a new concept of music. Many of his lectures about the future of music were later gathered under the title “The Liberation of Sound” by his pupil Chou Wen-Chung. Even after nearly a century, these texts provide invaluable hints, relevant to the issues of our time. In these lectures Varèse called music “organized sound” and repeatedly evoked the image of “sound beams” projected in space. Indeed, he began to compose a work titled “Espace” (“Space”) in the 1930s, but it was never completed. Although he was able to realize part of his spatial strategy in his “Poème électronique” at the Brussels Expo after the Second World War, it seems that the technology of the time was never sufficient. Today, more than fifty years after Varèse's death, how far have we advanced from the point where he stood?

In this speech, while taking Varèse's lectures into account, I will reconsider “space,” one of the important concepts in Electroacoustic Music. In doing so I pay attention to the fact that the boundaries of spatial theory have expanded from the physical to the philosophical, psychological and sociological fields. For example, Heidegger sternly criticized the standard concept of space as homogeneous, without any center, infinite, and merely a container or framework in which things exist. Abraham A. Moles proposed a “psychology of space” and tried to capture space in terms of “motion.” In addition, in the field of sociology, Henri Lefebvre stated that space has active properties and can take a positive part in productive processes. He then classified the function of space into three categories: spatial practice, representation of space, and representational space. These “spatial turns” are basically geographical concepts targeting social spaces; however, I show that they are equally applicable to thinking about space in music.

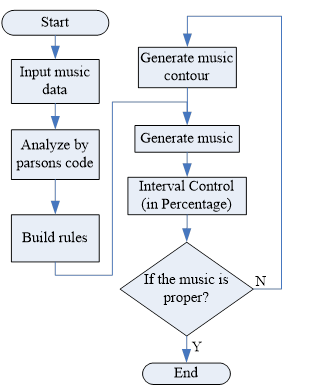

CHEN, Yi-Shin / CHEN, Kuan-Ting / LIU, Hsien-Chi Toby / SARAVIA, Elvis

HUANG, Chih-Fang / YU, Yen-Yeu

LÓPEZ RAMÍREZ-GASTÓN, José Ignacio

SU, Yu-Huei / SOO, Von-Wun / HUANG, Chih-Fang / CHEN, Mei-Chih / LI, Hsi-Chang / CHEN, Heng-Shuen

TORO PÉREZ, Germán / BENNETT, Lucas

TSABARY, Eldad / HEWITT, Donna

AKKERMANN, Miriam

Beyond practice? Tracing cultural preferences in mixed music performances

In the 1980s, newly developed, faster, and smaller digital processors allowed computers to be used in real time within musical performances in concert situations. In these early days of mixed music, the first personal computers entered the public market, and synthesizers with digital sound processing became established. Set-ups often combined computers and digital sound processors. As the technology was still very expensive, many compositions were developed in co-operation with institutions that provided access to computers and processors and, if necessary, technicians and programmers.

Over the last 30 years, computer technologies have developed rapidly. Technologies used in the 1980s are now outdated; the original devices, as well as the often hardware-bound programming languages, are no longer in use. But many compositions were created in a mutual relationship with the development of then-new technologies, and the technologies used for a composition's performance are therefore a significant part of these works. This poses new challenges when establishing new performances of mixed music from the 1980s in the 21st century: the original hardware and software are now outdated, but were usually not archived along with the composition. The documentation of these compositions usually includes descriptions of the technologies used and the set-up, but very rarely contains the original code or papers with excerpts of code transcription. Additionally, there is no established notation for the electronic operations, neither in combination with traditional music scores nor in more experimental notation formats. Audio or video documentation of the premiere was also uncommon.

In my recent research project with the working title “(Historic informed) Performance Practice for Computer music”, I am examining this field along several questions, such as: How is the information, or the inherent artistic decision, extracted from the documentation, and how is it interpreted? What guidelines are considered in the discussion of how these compositions should/can/must be played nowadays? How important is it to keep the original technologies and techniques, or should all the limitations set by the outdated technology be erased? Is it then still the same piece? What happens to the artistic idea if it is partly inherent in the original set-up? What do these practical decisions mean for the relationship between original composition and re-performance? Is the question of the authenticity of a performance important, and, if so, to what extent and to whom?

One first approach was to ask whether a tradition in performing mixed music can already be found, one which implicitly guides the whole process of establishing a re-performance. This, however, is not possible without considering the circumstances of the original production on the one hand, and the re-performance situation on the other. Taking into account writings on performance practice in other musical fields, it becomes apparent that the (surrounding) cultural constraints may also have a strong influence.

In this paper I want to discuss whether it is possible to pin down some hints of an existing influence of cultural issues. For this, I focus on some examples of mixed music composed in Europe in the 1980s and re-performed after 2005 in Europe and East Asia.

The paper is based on my research at the Institut de Recherche et Coordination Acoustique/Musique (IRCAM) in Paris and the Center for Research in Electroacoustic Music and Audio (CREAMA) at Hanyang University in 2015, where I gathered information on mixed music compositions of the 1980s in the archives and followed new projects in which compositions from this period were re-performed.

The discussion is based on the idea that deciding on suitable recent technologies, and on how to reveal technical and artistic information concerning the composition, can be strongly influenced by our (aesthetic) expectations. This includes not only the debate on the existence of a tradition within mixed music performances, but also hints at a deeper discussion on musical formation and self-confidence within contemporary music in Europe and East Asia, given that many recent composers not only work on their own musical language but are also significantly influenced by their surrounding culture.

But what would this mean in detail? Where do inherent aesthetic guidelines and/or expectations derive from? Is the process of establishing a performance led by musical or aesthetic expectations? How are these expectations influenced by inherent aesthetic guidelines, and are these driven by the surrounding culture and/or the musical (aesthetic) education? Is there a silent and invisible ruleset concerning the expectation on performances deriving from the surrounding art scene and/or music tradition?

Considering that technology is a crucial element within the artistic process: are cultural constraints perhaps less important for computer music than they were for music in former times, as technology was exchanged between the continents from the very beginning of the developments in digital sound processing? How important is it when David Wessel tells Gregory Taylor in an interview that he used the brand new synthesizer Yamaha DX7194, a prototype of the Roland MPU 401, and an IBM PC on his 1983 concert tour in Japan, which was organized in cooperation with Roland, and that he tried hard to bring this equipment to IRCAM as well?[1] Was it just one moment in time of matching technologies when Xavier Chabot (IRCAM/CARL), Roger Dannenberg (Carnegie Mellon University), and Georges Bloch (CARL) started in 1984 to develop an “instrument-computer-synthesizer system for live performances”, consisting of an IBM PC, three Roland MPU 401, and four Yamaha TX7 modules?[2] How did the use of technologies differ between the various music contexts, and how did this influence the emerging music?

- Miriam Akkermann

Miriam Akkermann was born in Seoul, Korea. She took a classical flute degree and an MA in New Music and Technologies at the Conservatorio C. Monteverdi in Bolzano, and studied product design at the Free University of Bolzano and composition and Sonic Art at Berlin University of the Arts (GER), where in 2014 she also completed her PhD in musicology, entitled “Between Improvisation and Algorithms. David Wessel, Karlheinz Essl and Georg Hajdu”. In her recent research, she focusses on the idea of historically informed performance practice of mixed music. Since 2015, she has been a member of the German Young Academy.

Her compositions, sound installations, and performances have been shown at international festivals and galleries, and she has published papers on artistic topics as well as on her research at international conferences. Since December 2015, she has been Lecturer at the Media Science Department at Bayreuth University.

www.miriam-akkermann.de

miriam.akkermann@uni-bayreuth.de

ANDO, Daichi

Efficiency of adopting Interactive Machine Learning into Electro-Acoustic Composition

In the conventional way of composing electro-acoustic music, the huge parameter sets needed to synthesize electro-acoustic sounds must be decided by human composers. In the past, pre-set parameter sets for synthesis were commonly used. But presets are not very useful artistically, because composing with them has become formulaic and leads into a rut. Changing a single parameter within a preset is also risky for the synthesis. It is therefore natural that ideas have appeared for adopting machine-learning techniques that learn from existing sounds and automatically generate the huge parameter sets needed for electro-acoustic composition.

On the other hand, almost all machine-learning techniques require either “existing teaching data, labelled good and bad” (in general, correct and incorrect data) or “explicitly written functions that decide whether a work is good or bad” (in general, top-down defined evaluation functions). This is a restriction of the machine-learning mechanism, and a restriction of creativity is unavoidable when machine learning is applied to art creation. Music generated by machine learning from correct and incorrect data, or from top-down defined evaluation functions, is not considered newly creative, because it is strictly based on existing works or sensibilities that have already become old.

Therefore, as a new method, the author adopts “Interactive Machine Learning” for music creation. In interactive machine learning, the computer describes the human composer’s sensibility explicitly, as methods, through a dialogue between human and computer. If human composers keep developing new sensibilities for their music, the computer states them explicitly even when they are not explicitly described in the composer’s own mind. Aesthetically, this can be seen as a relationship between artificial intelligence and human composers.

In this paper, the author summarizes the techniques actually used, then discusses the aesthetic meaning of adopting these techniques and the aims of the system under development, named CACIE (Computer-Aided Composition by means of Interactive Evolution).

The author mainly applies Interactive Genetic Programming, a kind of Evolutionary Computation, to the composition of all time-series media, such as electro-acoustic and notated works, and is currently also applying it to a support system for human performers in real-time improvisation. For interactive creation, many techniques have been developed in this system and tried in actual composition. The main idea of CACIE is that the tree representation of S-expression programming is suitable for describing music in general: for placing electro-acoustic sounds or musical notes, for describing the envelopes of synthesis parameter sets, and for describing all parameters of a work as time series. Typical examples of such representations are the research and works of David Cope, Common Lisp Music, and the OpenMusic system.
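As a rough illustration of the general idea described above, and not of the CACIE implementation itself, the following Python sketch represents a musical phrase as an S-expression tree and evolves a small population under a rating callback that stands in for the composer's listening judgement. The node names (`seq`, `repeat`, `transpose`), the fixed seven-semitone transposition, the selection scheme and all function names are assumptions made for this example only.

```python
import random

# Hypothetical vocabulary for this sketch; none of it is taken from CACIE.
LEAVES = [60, 62, 64, 65, 67, 69, 71]                 # MIDI note numbers as terminals
FUNCTIONS = {"seq": 2, "repeat": 1, "transpose": 1}   # node name -> number of arguments


def random_tree(rng, depth=3):
    """Build a random S-expression as nested tuples, e.g. ("seq", 60, ("repeat", 62))."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(LEAVES)
    name = rng.choice(sorted(FUNCTIONS))
    return (name,) + tuple(random_tree(rng, depth - 1) for _ in range(FUNCTIONS[name]))


def render(tree):
    """Evaluate a tree into a flat list of MIDI note numbers."""
    if isinstance(tree, int):
        return [tree]
    name, *args = tree
    parts = [render(a) for a in args]
    if name == "seq":          # play the first phrase, then the second
        return parts[0] + parts[1]
    if name == "repeat":       # play the phrase twice
        return parts[0] * 2
    if name == "transpose":    # shift the phrase up by seven semitones
        return [n + 7 for n in parts[0]]
    raise ValueError(name)


def mutate(tree, rng, depth=2):
    """Replace one randomly chosen subtree with a fresh random subtree."""
    if isinstance(tree, int) or rng.random() < 0.3:
        return random_tree(rng, depth)
    name, *args = tree
    i = rng.randrange(len(args))
    args[i] = mutate(args[i], rng, depth)
    return (name, *args)


def evolve(rate, generations=5, pop_size=8, seed=0):
    """Evolution loop in which `rate` stands in for the composer's judgement."""
    rng = random.Random(seed)
    population = [random_tree(rng) for _ in range(pop_size)]
    for _ in range(generations):
        # Keep the better-rated half, refill the rest with mutated copies.
        ranked = sorted(population, key=lambda t: rate(render(t)), reverse=True)
        parents = ranked[: pop_size // 2]
        children = [mutate(rng.choice(parents), rng) for _ in range(pop_size - len(parents))]
        population = parents + children
    return max(population, key=lambda t: rate(render(t)))
```

In an interactive setting, `rate` would play each rendered phrase and ask the composer for a score; for a dry run a stand-in such as `evolve(lambda notes: -abs(len(notes) - 8))`, which merely prefers eight-note phrases, can be used instead.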

An electro-acoustic work named “Vedana” by Masahiko Inada, accepted for the ICMC 2008 concert, was composed with CACIE. The author interviewed the composer about how it felt to use the system and about his creativity with the system while composing this work. In summary, he answered that composition with interactive machine learning is very similar to his conventional way of thinking about electro-acoustic composition, that his conventional method was strongly expanded with new ideas through the computer's aid, and that his creativity was not restricted by the system.

In aesthetic terms, as mentioned before, ordinary machine learning, which generates new works from existing input works or top-down defined evaluation functions, restricts creativity and is therefore not suitable for art creation. In contrast, the proposed method, composition with interactive machine learning, expands human creativity, especially in electro-acoustic musical works.

- Daichi Ando

Ph.D. in Science. Born in 1978 in Japan. He studied composition and computer music under Takayuki Rai and Cort Lippe at the Sonology Department, Kunitachi College of Music, Japan. He then studied computer music with Palle Dahlstedt and Mats Nordahl in the Art & Technology International Master Program at the IT-University of Göteborg, Chalmers University of Technology, Göteborg, Sweden. In addition, he received a Ph.D. in science from the Graduate School of Frontier Sciences, The University of Tokyo, Japan, for studies in the application of numerical optimization methods to art creation. Currently, he teaches and conducts research as Assistant Professor in the Division of Industrial Art, Tokyo Metropolitan University.

ASKA, Alyssa

Evaluating the need for unified notation: conceptual and creative consequences of communicating electroacoustic music

Electroacoustic music has been one of the most dislocating forces upon traditional western notation, challenging our concepts regarding what music is, as well as our ideas regarding how to express composed music in a manner so that others can reproduce it later. This paper, then, explores the concerns surrounding musical documentation of electroacoustic works, and some of the challenges faced in documenting these works that do not conform to conventional systems of documentation. It also discusses the influence that documentation systems have on the music that is created, and some of the ways in which the means of musical expression influence composition.

If we begin our discussion around the early medieval period, we can see rudimentary forms of notation emerging, beginning with neumes that depicted musical trajectory and gesture. Guido’s later developments in notation allowed for the pitch element to be notated such that performers could learn a piece of music without ever hearing it. This was an important breakthrough for both documentation of music and performance, but it also made a singular parameter of sound, that of pitch, the most important element, the musical substance, with all other elements being determined attributes (Kelly 2014, Lang 2016). This contributes heavily to how we have thought about and how we have written music in western society for several centuries, and even presently, this notational system and expansions of it are still used. The concept of pitch as substance also translated well to musical instruments developed in the following years, which were primarily pitch-based, and therefore a very consistent paradigm of notation and performance could be relied upon.

Electroacoustic music has served a very liberating role, both to the determination of substance, as well as the means of musical expression used. Other parameters of sound, such as timbre and space, can now be used as compositional elements. However, as both of these qualities are multidimensional, they are not as easily represented by notational symbols on a page. This has resulted in a variety of attempts to create unified notation systems and even systems of classification for these parameters. The notation of spatialization, for example, has been a major research focus in the last several years, especially as spatialization systems become more elaborate (Schacher et al 2014, Ellberger 2014).

It is also much harder to notate for electronic instrumental performance because many of the instruments do not have consistent and reliable expected behaviour. We can predict the behaviour of acoustic instruments because they are constrained by physical parameters of sound; however, without some kind of electronic extension, a string instrument, for example, can only make those sounds that are enabled by its physical components vibrating. This is not the case in computer music systems, or in acoustic music in which sounds are pre-recorded and modified. A computer music system can behave any way that a programmer tells it to, which prevents a (completely) universal paradigm from being established for composition or documentation.

Additionally, more electroacoustic works are beginning to draw from “the expanded field”, incorporating things such as visuals into a work (Ciciliani 2016). I have, for example, composed works in the past that use the performance aesthetic as a compositional parameter (Aska 2015, 2016).

Therefore, even as we still have not established a unified means of expressing electroacoustic music, the material on which we can draw continues to expand. It is simply changing too fast for scholars and composers to keep up with. The other issue is the 3-dimensional nature of parameters such as space and timbre (and the multi-dimensional elements present in the expanded field).

These parameters can't be represented on paper, but multimedia can be used instead; scores were reproduced on paper because that was the resource available, and there are now other options, such as video and even interactive tools. Such tools have been extremely useful in other art forms such as video games, which also do not have a predetermined universal functionality. There is always a common input across consoles, the controller, but in each game the buttons perform different functions. A player has to re-learn this for every game, yet there are in-game tutorials and a written book. This could therefore present a valid starting point for gesturally-controlled music, for example.

The lack of unified notation results in two primary concerns: the sustainability and future performance of EA works, and the lack of a commonly understood notational system for electronic music. This lack of unified notation system for electroacoustic works has led to a certain compositional effect; most works are performed by the composer, and most primary documentation systems end up consisting of a video of the performance. Performers aren't usually trained to perform other people's electronic works, and even diffusion tends to be done by the composer. This affects the style of the works considerably, as performers are often writing for themselves and therefore, will rely upon gestures, techniques, and procedures that they are accustomed to.

This places electroacoustic music in the very same realm as pre-Guidonian era western music: there were several types of rudimentary notation systems that could generally represent the music, but nothing unified, and a severe lack of systems of communication that enable the reproduction of works. The desire to sing works without ever having heard them before was the impetus behind the development of Guidonian notation. We face very different challenges in communication, however. While it is often a goal of acoustic music composers to notate the music in such a way that the performers can read and perform it without needing to ask questions of the composer, technology enables easy and instant communication. I can send a score from Canada to Japan in seconds, and if the performer (in Japan) had questions, they could not only write me an instant email or message, but could call me with video over the internet. This considerably changes the meaning of, and the need for, notated music. However, I argue that the notation, or lack thereof, changes how we compose and create music. Therefore, there are elements beyond simply conveying a work for reproduction that contribute to the need for notation.

Notation of acoustic music ultimately led from a very general representation of pitch and loose trajectory to very specific notation of every parameter. We could therefore view the notation of electroacoustic music as being in a phase similar to that of late Medieval music. The question remains whether a unified system is even necessary, as we can see that the Guidonian system had creative consequences regarding what was important musically, and this continued to affect music for centuries (and still does somewhat today). It is also important to consider that the challenges faced previously, including the lack of digital storage of pieces and of access to recordings, no longer exist, and that we have different media available today. Therefore, we may need to re-examine what the best way to communicate electroacoustic music is, so that it can be understood more universally.

- Alyssa Aska

Alyssa is a composer, researcher, and educator who writes both acoustic and electroacoustic works. Her current research explores the aesthetics of musical works which contain electronics, and concerns itself with the way in which new such compositional applications can be integrated into works in a musically meaningful way. She has done extensive work with translating gesture into sound, as a member of the UBC SUBCLASS and as an individual composer-performer. The results of this work have been presented at several conferences, including ICMC, NIME, SMC, and EMS. Alyssa received her B.Sc. in Music Technology from the University of Oregon under the supervision of Dr. Jeffrey Stolet and Dr. Robert Kyr and studied for an M.Mus in Composition at the University of British Columbia with Dr. Keith Hamel and Dr. Robert Pritchard. Alyssa is currently a Ph.D. candidate under Dr. David Eagle at the University of Calgary.

BLACKBURN, Andrew

The representation of the electronics in a musique-mixte environment: analysing some ontological and semiotic solutions for performance

Many occurrences of communication and ‘reception of idea’ (Landy, L. (2007). Understanding the Art of Sound Organization. Cambridge, MA: The MIT Press) are fundamental to the process of developing musique-mixte works (for instrument with electronics). In practice, the creative process requires a nexus between composer-creator and performer(s) operating across both the acoustic and electroacoustic realms. A commonly understood and accepted notation, and even language (a common semiotic ontology), for the electronic component of a musique-mixte work, comparably ubiquitous to the stave and stick or gestural notation within the instrumental paradigm, would assist the composition development process.

Presently, there is no systematised, universally accepted form of electronic notation with which to record performance details in a form that can easily be shared between performers. Whilst there is excellent software that can assist in analysing music/performance after the event (e.g. EAnalysis or Sonic Visualiser), composers usually create individual ‘notation’ solutions to represent the electronics component in a musique-mixte work. These solutions are normally tailored separately to meet the needs of both the player’s performing score (the instrumental score) and the technologist’s score. The ontology of each solution is pertinent initially to the musical creation and its creator(s) and secondarily to the performance environment.

Four musique-mixte works from the first years of this century provide exemplars for analysing the notation of the digital signal processing component of each work. The works under discussion are creations for pipe organ with live digital signal processing and, in each, the acoustic sound of the organ is the origin of all electronic sound emanations. The combination adds a layer of sonic complexity to the already rich sonic quality of the pipe organ. The musical intention of the processing, and an analysis of how it is represented in the notation of each work, is the core of this paper.

Consideration of the semiotic ontology of each ‘system’ used to represent the digital signal processing permits some conclusions to be drawn regarding the information required by each of the participants in the musical performance. With the exception of the work by Thurlow/Halford/Blackburn, the works are scored for an organist and technologist(s). Andrian Pertout’s composition also includes flutes. The electronic notation solutions in each work provide the instrumentalist with a representation of the electronic component of the work, which may have a currency beyond the exemplar works. The works under discussion are: Andrian Pertout (2007) Symmetrié Intégrante for organ, flutes and electronics Op 394; Lawrence Harvey/Andrew Blackburn Eight Panels for organ, live electronics and sound diffusion system; Steve Everett (2005) Vanitas; and Jeremy Thurlow/Daniel Halford/Andrew Blackburn (2015) Ceci N’est Pas Une Pipe. Reference will also be made to Uijlenhoet, R. (2003 rev. 2009). Dialogo sopra i due sistemi for organ and quadraphonic live electronics. The background of the composers is diverse: Australian, Chilean, American, Dutch, and UK. While two of these works (Eight Panels and Ceci N’est Pas Une Pipe) use Cycling74’s Max to create a software patch which serves in part as the ‘notation’ of the electronics score, the others each use different software/hardware combinations including Kyma and SuperCollider. The electronic notations in the organist’s scores are equally variable, ranging from an indication of a ‘scene’ change to detailed gestural instructions for both technologists and organist. Every work provides quite specific ‘recipes’ for (re)creating the sound palette which the performer/technologist may follow in conjunction with the organist’s score.

These compositions each provide an opportunity to delve into issues that have been raised earlier (e.g. Morrison (2014) Graphical Music Representations: A Comparative Study Based on the Aural Analysis of Philippe Leroux’s M.É. EMS 2014 proceedings). While not intending to provide a solution to the lack of a common electronic notation (which will likely be evolutionary in development rather than imposed), the paper will identify how the issue has been approached in the selected compositions, noting both the commonalities and the distinctions between them.

- Andrew Blackburn

Dr Andrew Blackburn is a Research Fellow at Universiti Pendidikan Sultan Idris (UPSI), Malaysia, having been appointed in 2011 as Senior Lecturer in Music and in 2015 as Deputy Director of the UPSI Education Research Laboratory. His research involvement includes higher education training and assessment, intercultural music, and leading research projects in organ performance - particularly pipe organ and live electronic processing of sound (DSP), new forms of music representation, and musical histories in Malaysia. Andrew’s doctoral thesis is The Pipe Organ and Realtime Digital Signal Processing: A Performer’s Perspective. Andrew has continued working with the range of expertise derived from his earlier career in Australia: music education, music creation, keyboard performance, music technology, and choral conducting.

Andrew has performed widely as soloist and with orchestras and ensembles all over the world, including Australia, Malaysia, England, Sweden, Denmark, Germany, Hungary, Italy, and Spain.

terpod1@bigpond.net.au

BLACKBURN, Manuella

Other people’s sounds: examples and implications of borrowed audio

The starting point for much electroacoustic music is the capture of audio from the sounding world around us. Recorded sound (field and studio recordings) provides the composer with pliable audio data, inspiration and impetus for the creation of new work. The content of these audio files varies widely to include sounds from musical instruments, inanimate objects, spoken languages and environmental landscapes. Composers working in the field of electroacoustic music and all its associated formats and subgenres (soundscape, live laptop improvisation, acousmatic and noise-based, to name a few) are reliant on the presence of audio, whether it be from synthesized or recorded sources, in order to move forward with a new work. Sound’s fundamentality to the composition of electroacoustic music is clearly understood within this discourse, but what is less clear and defined are the finer details relating to external sound sourcing, especially when the composer looks beyond their own materials, to others and/or digital resources (e.g. sound archives, sound libraries and sound maps) for this starting point inspiration. On the surface, it can seem that by removing the sound recording stage of the process, the composer forfeits a direct connection with the physical source, along with memories of this sound-capturing act. On the other hand, for some, skipping this step is not even an option, especially for composers who pride themselves on their well-honed microphone techniques and noise-minimizing skills, since the recording of one’s own sound may be viewed as the first stage of the compositional process in which a compositional imprint is firmly forged and found. A given composer may have a recording ‘style’ or pattern, and this approach to recording can seep into his/her choice of sound materials. Take the example of a soundscape artist who braves the wind and rain with their highly specialized and adapted recording equipment.
Their techniques for shielding their microphone from direct gusts and torrential downpours provides a striking contrast to the composer who inserts lavalier microphones into a bottle of fizzy water to capture the liquid’s microscopic effervescence within the calm, acoustically dry recording studio. In short, a composer can choose and create what sounds they want to work with in order to achieve specific, personalised end results. Chris Watson’s recording expertise comes to mind in this instance with his skillful use of ‘super compact particle velocity microphones’ to capture minute, barely-there caterpillar sounds[3]. Sound recordings can be in some way a reflection of the composer’s personal aesthetic, demonstrating creative planning at a very early stage in the compositional process. Contrary to this, there are a number of instances where composers choose not to work with sounds they directly collected, some in fact never record their own sound, as found in the numerous cases of sampling or plundering. Composers who seek out existing pre-recorded sources juggle their own creative integrity with the often-requisite sense of homage or respect assumed in these situations. This paper chooses to take on this issue, searching for specific examples, circumstances and outcomes of sound borrowing. Issues of sound quality, personal preference and dealing with dated or cultural remnants all undoubtedly arise when examining viewpoints and musical outputs of composers using other people’s sounds.

The paper considers the aspect of originality and how this can be lost when other people’s sounds are sourced and used in a composition. Adrian Moore talks of achieving originality through sound recordings: “how to make your work original? …record your own… they are immediately personal to you and the playback of these sounds comes with the guarantee that ‘you were there’. It is immediately easier too, to be able to re-trigger the emotions felt as you recorded the [sounds].”[4] With this in mind, we can start to see personal attachments and connections with sounds that we might miss out on if we make use of other people’s material. Sound recordist Antye Greie supports this viewpoint regarding her own field recordings: “they [field recordings] were my memories and my property and that meant a lot to me, like a bass drum made out of the pop of my lips recorded in Belgrade, or the hi-hat sounds made of snow I was crushing… these sounds made the songs more meaningful to me.” [5]

This paper will challenge the notion of originality loss and instead search for the potential benefits and upsides of using externally sourced sound. The paper will present perspectives on the intricacies and nuances of borrowed sound through a collection of case studies where composers have looked to others for sound materials. These include:

- The ‘Prize Presque Rien’ competition (questionnaire responses from Daniel Blinkhorn and James Andean).

- Cormac Gould’s CoreCore (2015), demonstrating exclusive use of sound archive sources, with only freeware software used for the construction.

- Compositional responses to the European Space Agency (ESA) ‘Estrack 40th Anniversary Sound Contest’: Nikos Stavropoulos’s work, Metakosmia (2015).

- Pete Stollery’s ‘Three Cities Project’ (2013) and the sharing of sound recordings from foreign cities (Stollery, Kim and Whyte).

- Instruments INDIA composition project (2016-17) – three composers commissioned to work with the Instruments INDIA sound archive.

Examining case studies of sound borrowing aims to demonstrate the variety of perspectives and motivations composers have in selecting and integrating these materials into their own aesthetics. Seeking sounds from archives and libraries can be viewed as advantageous in the accessibility this affords the composer; however, adopting these sounds also means adopting any quality issues they may carry with them.

The paper will also consider how composers and audiences respond to works that borrow culturally significant sound, drawing on the author’s own experience in establishing the Instruments INDIA composition project, which commissioned three composers (Steven Naylor, Greg Dixon and Ish Sherawat, 2017) to work exclusively with a sound archive of Indian musical instrument recordings. Introducing composers to foreign and unfamiliar sound sources had an educational and creative impact upon the composers’ compositional processes and their preconceptions of instrument sounds and capabilities.

- Manuella Blackburn

Manuella Blackburn is an electroacoustic music composer who specializes in acousmatic music creation. She has also composed for instruments and electronics, laptop ensemble improvisations, and music for dance. She studied Music at The University of Manchester (England, UK), followed by a Masters in Electroacoustic Composition. She became a member of Manchester Theatre in Sound (MANTIS) in 2006 and completed a PhD at The University of Manchester with Ricardo Climent in 2010. Manuella Blackburn has worked in residence in the studios of EMPAC (Experimental Media and Performing Art Centre, New York), Miso Music (Lisbon, Portugal), EMS (Stockholm, Sweden), Atlantic Centre for the Arts (Florida, USA), and Kunitachi College of Music (Tokyo, Japan). Her music has been performed at concerts, festivals, conferences and gallery exhibitions in Argentina, Belgium, Brazil, Canada, Chile, Costa Rica, Cuba, France, Germany, Italy, Japan, Korea, Mexico, Portugal, Spain, Sweden, and the USA. She is currently Senior Lecturer in Music at Liverpool Hope University.

BOSSIS, Bruno

Electroacoustics as transcultural dialog

in Jonathan Harvey’s music and thought

On one hand, beyond the obvious qualities of the music of instruments or voices, technology gives access to another level of expression. Despite the power of timbre in instrumental music and the strength of words in vocal music, the infinite possibilities of simulation and treatment offer means of expression that are at once stronger and subtler. Over the last seven decades, analog and then digital electronic technologies have been used in different musical styles, with a wide range of writing techniques, aesthetics and goals.

On the other hand, since Le Désert by Félicien David and even before, European composers have been fascinated by the Orient, its cultures, arts, myths, religions and philosophical trends. From Debussy to Murail or Mâche, many occidental composers have understood the remarkable resources and richness of a multicultural approach. In addition, Tōru Takemitsu, Yoshihisa Taira and plenty of other Asian composers show us more than a simple interest in occidental culture. All of the greatest composers aim at a sort of hybridization or fusion of oriental and occidental cultures, without any tendency toward self-denial or impoverishment due to a worldwide standardization.

My purpose is to demonstrate how effectively technologies can facilitate and deepen communication between different cultural areas. Multiple examples can be found in the domain of art and especially in musical composition. However, I’ll concentrate my investigation on a composer who considered the powerful possibilities of technologies in order to improve the subtlety of expression. In fact, far from spectacular effects, computers are able to give the right tools to explore delicate frontiers in the art of composing. I have already written about the capacity of technologies to deliver refined and sophisticated emotions, but this time I’ll explore the role of electronics in the intercultural relationships inside composition.

The English composer Jonathan Harvey (1939–2012) was particularly interested in both domains: electronics and the Orient through Buddhism. Early in his career he discovered – thanks to Milton Babbitt at Princeton University – the ability of computers to comply with all sorts of compositional and expressive ideas. One of the great qualities of Babbitt was his excellent knowledge of extended serialism. He taught his students the means of controlling all sorts of variables in the composing process. Or to put it on a higher and more accurate level: Babbitt was one of the best authorities on structuralism. Thanks to him, Harvey combined in Timepoints (1970) the power of computational processes with the concepts resulting from structuralism. This was before Harvey’s real interest in the elevation of mind through art and music.

Then Harvey developed a deeper and deeper curiosity for oriental cultures and especially Buddhist philosophy. He was born and raised in England, and his family’s cultural background was clearly linked to Christianity and more specifically to the Church of England. However, before long, he enlarged his cultural interests, as many other artists did, toward other cultural areas. Fascinated by both the freedom and the intensity provided by Buddhism – a philosophy of life more than a real religion in the occidental meaning – he tried to understand this different world. His goal was to assimilate the two different approaches in his art. Reading, practicing meditation, even traveling to observe and dialogue with monks, he built his own spiritual world.

From the 1980s until his death, Harvey’s music used the strength of electronics as a tool to express this enlarged “spirituality”, as he put it. The pieces he composed at Ircam prove how the supposed coldness of technologies can actually be useful in creating subtle expression. I will take precise examples from this period to demonstrate, through short bits of formal analysis, how technologies are more than just a means to enhance trivial sound effects or a resource to better control different formal variables. In my presentation, I’ll base my arguments on Ritual Melodies, Mortuos Plango, Vivos Voco, the String Quartet No. 4, Speakings and other pieces. Not all the pieces are related to the Orient, but the means used in his general research are there. My book on Harvey, which is currently going through the editing process, will also show this triple duality (dvaita) transformed into unity (advaita): technologies/poetic creation, control/refinement, Christianity/Buddhism, and the Orient/Occident. His main idea, which he wrote about, was to musically intertwine various cultures and their different ways of dealing with the mysteries of life and death.

- Bruno Bossis

Bruno Bossis is a Professor in musicology, analysis and computer music, director of the Musique laboratory and permanent researcher of the research team Arts : Pratiques et poétiques, EA 3208 at the University of Rennes 2 (France). He wrote the book La voix et la machine, la vocalité artificielle dans la musique contemporaine. Editor of several books, he is the author of numerous articles on contemporary music, analysis and electroacoustic music. Currently, he is working on a book on Jonathan Harvey (Symétrie).

CHEN, Hui-Mei

The cross-use of electroacoustic music and traditional funeral ritual music of Taiwan in a dance performance The End of the rainbow

Performed by the “Mauvais Chausson Dance Theatre” group from 9 to 11 December 2016 at Song-Shan Cultural and Creative Park, Taipei, the dance performance The End of the rainbow (《彩虹的盡頭》) took its inspiration from one of the popular funeral performing art arrays in Taiwan, 牽亡歌陣 (Qian-Wang-Ge-Zhen). According to local belief in Taiwan, this ceremony leads the soul of the dead through the chthonian path and the gates of the netherworld, under the blessing and protection of the gods, to arrive at nirvana with Buddha. Having researched Qian-Wang-Ge-Zhen for several months through field investigation in southern Taiwan, the choreographer and four dancers learned from a master of this art and themselves took part as performers in ceremonies for families of the deceased. Although the dance movement was inspired by Qian-Wang-Ge-Zhen and the music was based directly on recordings of the master’s singing, the main part of the dance performance used mixed electroacoustic music. According to the music designer for this performance, when she received the choreographer’s instruction to insert electroacoustic music into such a performance, she felt confused. Yet from the point of view of an audience member, the two kinds of music do not disturb each other; on the contrary, I find this cross-use interesting.

Actually, in Taiwan, digital technology and visual art have for decades been inserted into the ceremonies of popular folk rituals that call out to divine spirits. Although inspired by the funeral ceremony of Taiwanese folk belief, the choreographer and the dancers do not just reproduce the body movements of folk rituals, but develop them into the basic motif of the performance’s body vocabulary. The End of the rainbow transformed the folk rituals into a new and original performing art, and provides a different experience to the participants. For its excellent performance, The End of the rainbow was recognized by the Taishin Arts Award 2016.

This paper aims to present the cross-use of electroacoustic music and the traditional funeral ritual music Qian-Wang-Ge-Zhen of Taiwan in the dance performance The End of the rainbow, and to discuss the socio-cultural ramifications of electroacoustic music through the performance.

- Hui-Mei Chen

Hui-Mei CHEN began her professional career at the age of twenty as a flutist of the National Symphony Orchestra of Taiwan and acquired her official teaching post at about the same time. After her studies at the CNR de Paris, she assumed an even more active role in Taiwan’s musical scene, as a pedagogue as well as a performer. She obtained her DEA degree at the University of Paris IV/Sorbonne and the IRCAM during 1996-1997, which launched her new career as an academic researcher. In her third venture to Paris, she obtained her Ph.D. degree with highest honours in Music and Musicology of the Twentieth Century at the University of Paris IV/Sorbonne in 2007, with a dissertation on the Japanese composer Yoshihisa Taïra (1937-2005). Since then she has been a very active researcher and has been invited to participate in conferences in Taiwan and abroad. After teaching at several universities in Taipei, she has held a full-time assistant professor position at Shih Chien University in Taipei since August 2017.

CHEN, Yi-Shin / CHEN, Kuan-Ting / LIU, Hsien-Chi Toby / SARAVIA, Elvis

Automatic Beatmaps Generation for Electroacoustic Songs in Rhythm Games: an Audio Data-driven Approach

Introduction

The demise of art music, such as classical music and most recently jazz music, as most famously argued by Neill (2002), has to do with the inability of composers to incorporate popular culture elements and demands. Neill further offers debatable evidence that the newly discovered interest in electroacoustic music is due to the rhythmic complexity and sound dynamics that this type of music offers. The composition of electroacoustic music through the incorporation of new aesthetic approaches makes such music more favorable and pleasurable as compared to music without rhythmic structure. There is no denying that the artistic nature through which electroacoustic music is composed makes it an interesting source of inspiration for music-based applications. Therefore, rather than trying to understand the social reasons for the demise of classical music and its decrease in audiences, we combine two modern techniques, electroacoustic composition and gamification, to help revive interest in traditional and classical types of music. In other words, we envision multimedia applications, with human-gestural interaction, that can influence users, regardless of music taste, to sympathize with less popular musical styles.

This study aims to disseminate the concept of gamification applied to electroacoustic music as a viable way to increase the outreach of, and engagement with, falling-out-of-favor music among different cultures. That being said, we focus strictly on the technical aspect of the proposed gamified tool, which is based on a set of several popular electroacoustic pieces. Gamified tools that incorporate electroacoustic music, more widely known as rhythm games in this space, are found to bring numerous benefits, ranging from improved mental well-being to physiological improvement (Cevasco et al. 2005). Another distinctive benefit, and one which we investigate thoroughly, is increased engagement with and appreciation of classical and other less popular music. More formally, we hypothesize that higher engagement and retention time with our rhythm game will increase users’ liking and appreciation of traditional and less popular music, such as classical music.

Our main contributions, as they correspond to the aims of this project, are as follows:

- The implementation of an audio data-driven approach to automatically generate beatmaps that are powered by electroacoustic music. More importantly, through this approach we aim to address the music-genre limitation problem present in other related rhythm games.

- A measurement of the effectiveness of rhythm games powered by electroacoustic music, with the ultimate goal of attracting users to explore traditional and unconventional music genres. In this respect, we envision the wide-spread use of multimedia applications to revive interest in classical music and other music that has, over the years, inexplicably lost popularity.

As a long-term goal, we plan to capture and analyze users’ real-time game-playing patterns to conduct extensive studies on the effects of electroacoustic music in the context of culture and other human aspects such as language, perception, and emotion. Moreover, with these complex datasets and multi-featured multimedia tools, we can begin to answer fundamental research questions about the cultural benefits and effects of electroacoustic music and its art.

Rhythm game powered by electroacoustic music

Rhythm games have long been mainstream in the interactive games space. In the standard rhythm game, players tap on the hints of a beatmap to score points as electroacoustic songs play in the background. Through tapping these hints, players are consciously or subconsciously interacting with audio and music features like drum beats, chords, tempo, or even melodies. With such interactive features, previous studies have shown that rhythm games, besides improving individuals’ cognitive performance, can also be applied in pedagogical scenarios (Abramson 1997; Overy 2008). Thus, these games come with purposes beyond entertainment. Most rhythm games are confined to fixed hand-crafted beatmaps and a limited range of electroacoustic songs. These limitations result in players easily disengaging from musical games as they find no excitement in the monotonous stages; hence the designated missions or intended purposes of the games are easily hindered. Rhythm games built around electroacoustic music inherit the intrinsic and artistic nature through which this type of music is composed. As with many other successful interactive games, our assumption is that the unexpectedness and dynamicity of beatmaps in our rhythm game need to be enhanced in order for players to experience positive emotion while playing it. For instance, the rhythm game must effectively embed the element of surprise, which is crucial to boost the stickiness of interactive games, regardless of genre.

In this study, we propose an audio data-driven approach that can automatically generate beatmaps for rhythm games. To keep players intrigued, our generative beatmaps should possess a few characteristics. First, the hints in generative beatmaps have to be perfectly aligned with rhythm points, which increases the chance for users to experience reward sensations. In order to retain rhythm points, percussion parts in electroacoustic songs are transformed into note patterns. The longest common subsequence (LCS), as surveyed by Bergroth et al. (2000), is then used to extract patterns that appear frequently in songs. In this way, beatmaps can be composed from significant patterns of rhythm points derived from the song. Second, we have to ensure that our generative beatmaps encourage players by offering sufficient excitement. For this purpose, DifficultyScore is introduced to measure the goodness of our beatmaps: composed of a repetition measure and the metrical complexity proposed by Toussaint (2002), it evaluates the complexity and intensity of generated hints. Based on the score, players can then choose the difficulty level they prefer to attempt. Finally, with this technique, automatic beatmaps can be generated from any variety of electroacoustic songs, which means that the songs offered in rhythm games would no longer be subject to a genre limitation problem. Moreover, any song can now be adopted as part of the stages in a rhythm game. The playability and stickiness of rhythm games are also expected to increase with sufficient generative beatmaps.
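As a rough illustration of the pattern-extraction step, the following Python sketch computes a longest common subsequence between two bars of percussion onsets using the classic dynamic-programming table. The onset encoding (`x` for a hit, `.` for a rest), the function name and the two example bars are assumptions made for illustration only; the abstract does not specify how note patterns are encoded or which of the LCS algorithms surveyed by Bergroth et al. (2000) is used.

```python
def longest_common_subsequence(a, b):
    """Return one longest common subsequence of sequences a and b as a list."""
    rows, cols = len(a) + 1, len(b) + 1
    # table[i][j] holds the LCS length of a[:i] and b[:j].
    table = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):
        for j in range(1, cols):
            if a[i - 1] == b[j - 1]:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])

    # Walk back through the table to recover one subsequence.
    result = []
    i, j = len(a), len(b)
    while i > 0 and j > 0:
        if a[i - 1] == b[j - 1]:
            result.append(a[i - 1])
            i -= 1
            j -= 1
        elif table[i - 1][j] >= table[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return result[::-1]


if __name__ == "__main__":
    # Hypothetical encoding: eight sixteenth-note slots per bar,
    # "x" marks a percussion onset and "." marks a rest.
    bar_a = list("x..x..x.")
    bar_b = list("x.x...x.")
    shared = longest_common_subsequence(bar_a, bar_b)
    print("".join(shared), len(shared))
```

When several subsequences share the maximal length, this sketch returns only one of them; a beatmap generator would presumably need a further rule, not described in the abstract, to choose among such ties and to count how often a pattern recurs across the song.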

Preliminary Conclusions

To avoid bias of any sort, participants of different cultural backgrounds and genders were invited to take part in the experiment. We then validated our results and tested our hypothesis that our rhythm game, built on several electroacoustic pieces, increases player engagement with music of diverse genres, more specifically less popular music. Preliminary experimental results reveal that, despite the participants' preference for particular musical styles, their listening times increased significantly as they engaged with the rhythm game, regardless of the songs that played in the background. In comparison to listening to the raw songs, where disengagement is observed to occur frequently, participants willingly spent more time interacting with unpredictable beatmaps that were powered by the rhythm game. Overall, the proposed automatic beatmap generator is beneficial to the playability and stickiness of rhythm games. This in turn leads to a rise in user engagement with less preferred musical styles, which would otherwise remain unexplored. It is important to point out that one of the main challenges of this study is the difficulty of assessing or validating whether our rhythm game increases the liking of particular music genres over time. In other words, immediate engagement is easier to measure than user preferences over long periods of time.

In conclusion, the use of electroacoustic music in rhythm games presents an opportunity to revive interest in classical and other less popular music. The ability of our rhythm game to support unlimited music genres sets the stage for future studies and for the implementation of diverse music applications. For instance, with the proposed technology any type of music can now be adopted and used to automatically generate beatmaps, which is potentially useful for studies that consider understudied musical styles. Besides presenting empirical evidence on the usefulness of electroacoustic music via rhythm games, our goal with this study is to discuss the importance of electroacoustic music in the revival of art music itself. This implies that we need to investigate more deeply the underlying factors and effects of electroacoustic music in rhythm games to actually begin reaping societal benefits from it.

References

• Abramson, R. M. (1997). Rhythm games for perception & cognition. Alfred Music Publishing.

• Bergroth, L., Hakonen, H., & Raita, T. (2000). A survey of longest common subsequence algorithms. In String Processing and Information Retrieval, 2000. SPIRE 2000. Proceedings. Seventh International Symposium on (pp. 39-48). IEEE.

• Cevasco, A. M., Kennedy, R., & Generally, N. R. (2005). Comparison of Movement-to-Music, Rhythm Activities, and Competitive Games on Depression, Stress, Anxiety, and Anger of Females in Substance Abuse Rehabilitation. Journal of Music Therapy, 42(1), 64–80.

• Neill, B. (2002). Pleasure Beats: Rhythm and the Aesthetics of Current Electronic Music. Leonardo Music Journal, 12, 3–6.

• Overy, K. (2008). Classroom rhythm games for literacy support. Music and dyslexia: A positive approach, 26-44.

• Toussaint, G. T. (2002). A mathematical analysis of African, Brazilian, and Cuban clave rhythms. In Proceedings of BRIDGES: Mathematical Connections in Art, Music and Science (pp. 157-168).

- Yi-Shin Chen

Professor Chen joined National Tsing Hua University (NTHU) in 2004. She has served eleven years as the director of the International Master Program of Information Systems and Applications, which is one of the two international master programs in NTHU. Professor Chen is a passionate educator and researcher, and throughout her career, 60 of her students have graduated, including 16 international students and 10 students without an IT background. Two received Ph.D. degrees and 58 received master’s degrees. All Professor Chen’s students received one-on-one, personal advice each week, even during her three maternity-leave periods.

Professor Chen is passionate about increasing society’s benefits through her research efforts. Due to her awareness of the declining audience in classical music (through her 10-year professional training to be a pianist), Professor Chen’s research investigates multimedia applications to attract potential audience. Due to the fear of a media monopoly, she has focused her research efforts on Web intelligence and integration. Her goal is to create a social media interface that explores and visualizes the Web data easily.

yishin@gmail.com

CORRAL, Jeremy

On the difficulty to consider as a continuity the production

of the NHK electronic studio

It is known that production at the NHK electronic music studio does not exhibit any theoretical or formal unity obvious enough that we could easily resort to synchronous methods of analysis with a systematic nature, which would allow its essentialization: numerous composers worked there, none of whom stood out – or rather sought to stand out – as the figurehead around which to establish a common direction and precise features. Therefore the play of opposites used to describe production of musique concrète at Club d'essai / GRMC in France and the electronic music of NWDR in Germany cannot be applied to grasp what is created at the NHK as well – or in a more limited fashion. Beyond an analysis of the aesthetics of the pieces which we could constitute – to put it simply – around specific technological, technical and stylistic characteristics, it thus appears necessary to highlight the shared particularities from the cultural context and the work environment in which they were being produced; in other words, the idea would be to extract the core properties on which depend all the ones previously mentioned.

To allow that, the development of a diachronic history of the studio's operation is an obvious tool to consider. Indeed, if the studio is where all the repertoire was created it seems natural that it would de facto represent – with high certainty for the researcher – a structural setting, a persistence of stable landmarks, encompassing and levelling the music pieces through the action of agents that we can believe to be irreducible. Yet, some archives tend to show that it cannot be considered self-evident. The purpose of this communication is to underline with a few examples backed by references how it is proving challenging to apprehend the production of the studio in terms of continuity.

First of all, the most essential question is to ask ourselves what we really mean by the NHK electronic music studio: that is, which structure, in space and time, are we referring to. Was the studio created in 1954 when Moroi Makoto (the first to write about Cologne electronic music and to present its principles the same year in an article published shortly before in the journal Ongaku geijutsu) joined a team of NHK technicians to perform sound experiments with existing material? Or was it in 1955 to promote the conception, by Mayuzumi Toshirō, of the first electronic music studies based on the analysis of texts from Robert Beyer and Herbert Eimert? Or even in 1956 with the creation, by Moroi and Mayuzumi, of the first original piece titled Shichi no variēshon? Would it be possible that what is known today as the electronic music studio was a construct posterior to 1956?

It turns out that all those possibilities are at the same time correct and insufficient. In fact, it seems that the first mention of the existence of a "studio" appears in some writings by Moroi published in 1957 in Ongaku geijutsu to highlight the work done on Shichi no variēshon. Yet activity reports from the NHK, presented through almanacs, apparently only report a "laboratory" after 1964, that is, when the Audiovisual division was moved to a new building located in a different part of Tōkyō, a fact that an article in the Asahi Shinbun highlights with its title: "An electronic music studio. For the first time possible at the NHK". However, we now know, thanks to an autobiographical text by Shibata Minao published in 1995, that prior to this move, what the enterprising and ambitious composers could already consider a studio had only been a set of machines, first stored one after the other in a corridor of the building, generally only accessible after the working hours of the recording and broadcasting studios, and then in the observation room of the hall dedicated to symphonic concerts. Perhaps equally significant is the late but continuous mention in those almanacs, as sections from 1961 onwards, of the production of electronic music at the NHK. Since 1960 was the year when Ondine by Miyoshi Akira earned the first prize at the Italia competition, organized by the Rai in Italy to reward the best television and radio programs, we may think that this success in fact initiated an awareness of the value of a creative endeavour about which it was from then on necessary to communicate more.

Second of all, which pieces are really considered as coming from the studio? Numerous pieces produced for radio serial broadcasts, special programs, or for specific institutional use have an uncertain status and do not appear in all the inventories made over the years. A creation such as Rittai hōsō no tame no myūjikku konkurēto, for instance, produced by Shibata Minao in 1955, whose origins are not in doubt today (it is notably part of the compact disc anthology Oto no hajimari o motomete dedicated to the work of the NHK electronic music studio), is nevertheless only presented as a production of the studio after 1968, after appearing in the International Electronic Music Catalog compiled by Hugh Davies. Lists did not refer to it before that, and for a good reason: Shibata mentioned in 1995 that his piece, produced during the same period as Mayuzumi's studies, was conceived in a different recording studio from theirs; that studio, already established and operational, probably offered greater stability and ease of work.

Those few facts – obviously far from comprehensive – illustrate how delicate a task it is to restrict the production of the studio within known markers, as it cannot be said that all production from the NHK taking advantage of electronic technologies or the technical capabilities of the tape recorder automatically becomes a piece from the electronic music studio. It is all the more perilous considering the fact that there are no official archives of the studio, and that the only available recourse for the researcher is to rely on scattered documents and discographies. In this way, to achieve simplicity and clarity, it is not surprising that we would need to rely on a rational and quickly workable classification as made available by the inventory produced a posteriori. Although convenient and allowing us a direct entry into the repertoire under scrutiny, this cannot suffice to extract the potential characteristics of the aesthetic identity of the production of the NHK electronic music studio; nor can it help determine the stakes of its positioning within the international production. Ultimately, these are the difficulties that a method yet to be defined should attempt to manage and minimise.

- Jeremy Corral

Currently a doctoral student in Japanese Studies at Inalco in Paris and a researcher at Ōsaka University of Arts, I’m writing a thesis about the first years of activity of the NHK Electronic Music studio. My research interests include the history of contemporary music and experimental music in Japan, as well as Japanese cinema and media.

DI SANTO, Jean-Louis

“Six Japanese Gardens” by Kaija Saariaho: eastern and western temporalities

“Six Japanese Gardens” (1994) was, from its very conception, fundamentally an intercultural work: its composer, Kaija Saariaho, has ties to both Finland and France, and the piece was commissioned by the Kunitachi College of Music in Tokyo. It was also written in memory of the Japanese composer Toru Takemitsu. Does this work reflect this interculturality? To answer this question, I first look for the relations that can be found between the sounds themselves and what may reflect a way of thinking, or a symbolic dimension. Can these two aspects then be intercultural? It is easy to hear that an intercultural dimension lies in the very choice of instruments: their timbre sounds both occidental and oriental, with similar functions. Concerning the symbolic dimension, I will study the relation between formal analysis and philosophical analysis, using eastern and western philosophy. According to K. Saariaho herself, “Six Japanese Gardens is a collection of impressions of the gardens I saw in Kyoto during my stay in Japan in the summer of 1993 and my reflexion on rhythm at that time.”

The first movement, titled “Tenju-an Garden of Nanzen-ji temple”, can be considered an electroacoustic piece because it contains an electronic part and because its instrumental part consists only of a pulse played on different percussion instruments, one after the other, creating variations of timbre. Since it is the first movement, it can be considered an introduction that contains and exposes the purpose of the whole work. The analysis of this movement proceeds in two steps.

1) Formal analysis

As a first step, I will make a formal description through a transcription made with the acousmoscribe, a system of signs that describes sounds from a phenomenological point of view and enables the analysis of the relationships between instruments and tape by means of these signs. Tape and instruments are considered using reduced listening, and can be compared. Moreover, the description of the shape and matter of sounds makes it possible to see structures that present some isomorphisms with structures of time. It also enables a comparison between the electronic part and the instrumental part. Finally, there is both an opposition and a link between the tonic timbre of the instruments and the inharmonic timbre of the tape.

This work is obviously a symbolic work and speaks about time through different kinds of rhythm. A strong opposition exists between the instrumental part and the electronic part. Indeed, the work opposes two radically different temporalities: pulsed and oriented time in the instruments, and smooth and static time in the tape part. The analysis of this work with the acousmoscribe allows both a formal and a symbolic analysis, and so makes it possible to study the semiosis, the way the plane of content works with the plane of expression.

2) Symbolic analysis

If musicians can easily speak about rhythm from a formal point of view, philosophers are better placed to analyse concepts and to speak about temporalities with words: how does this piece speak about time, and what is time? Can music be a way of thinking the world, and can it have a hermeneutic function? I will try to answer these questions using analyses of time by western philosophers, more particularly Martin Heidegger and Jacques Derrida, and by a Japanese philosopher, Nishida Kitarô. The approach of K. Saariaho herself allows this comparison. She says: “Music is a pure art of time, and the musician – composer or not – builds and controls the experience of the flow of time. For music, time is material, and by this fact, to compose is to explore all the forms of time.” (Saariaho, 1997). “Tenju-an garden” exposes different kinds of time.

2.1 In this piece, there is an opposition between the pure instant and the duration of time, a problem studied very deeply by these philosophers. Of course, on a basic level, this opposition is materialized by short percussive sounds and long, flowing sound files. But, on a more elaborate level, we can easily hear that the percussive sounds are repeated and create duration, while the long sounds maintain the listener in a perpetual instant. This opposition creates a dialectic studied by Nishida: “It [time] must be considered as continuity of discontinuity.”

From a western point of view, Derrida expressed the same idea in a different way: “The impossible co-maintenance of several present maintenants is possible as maintenance of several present maintenants.”

2.2 The superposition of instruments that sometimes occurs, and the interleaving and overlapping of sound files on the tape, turn each moment both to the past and to the future, as these philosophers have thought. Nishida wrote: “… we are touching the infinite past […] But at the same time [...], we are also confronted with what determines us from the infinite future...”

In a different way, Heidegger spoke of “the ekstatic horizontality of time”, meaning that in each moment past, present and future coexist.

2.3 These oppositions co-exist with another opposition: individual temporality versus historical and social temporality. This dimension also exists in Saariaho's work: the highly ritual character of the instrumental part responds to the Gregorian chant we can hear in the electronic part. Even the sound correspondences between instruments and tape can be heard in this sense. These two temporalities refer to a time that exceeds individual time: the time of creation and the time with others. These aspects are studied by both Nishida and Heidegger.

2.4 The repetition of patterns (the association of smooth and granular timbre, for example) can be linked to what Heidegger called individual repetition: “In the being-toward, Dasein repeats itself in the most proper for-being by early. The proper Gewesene, we call it repetition.”

2.5 Finally, according to these philosophers, time structures are linked to being. Nishida says:

“The “you” as the absolute other that the “I” sees deep inside himself must be a “you” who, as an infinite past, determines the “I” in an internal way from its deep inside, i.e. a “you” who is past.” This view is very close to what Derrida called “différance”: things become different when they are deferred in time.

In other words, the consciousness of the other is directly linked to the perception of time. So “Six Japanese Gardens” can also be considered a reflexion on the human being.

Tenju-an Garden of Nanzen-ji Temple speaks about complex temporalities that can be analysed from an eastern or a western point of view. These points of view very often converge, and one can think that interculturality can be universality. The divergences can be thought of more as complementarity than as opposition. Music, with its own language, can thus reflect our perception of the world and reach a certain universality.

- Jean-Louis Di Santo

Jean-Louis Di Santo studied classical guitar and electroacoustic composition. He is the recipient of several awards in composition competitions and has played in several festivals. He is especially interested in the relations between sound and meaning. He has discovered the sound minimal unit (EMS06) and has created a notation for sounds based on reduced listening called “acousmoscribe”. He has participated in many conferences in France and abroad.

ESCANDE, Marin

The tape music of Jikken Kôbô 実験工房 (Experimental Workshop): Characteristics and specificities in the 1950s

Active during the 1950s in Tokyo, Jikken Kôbô was a collective of fourteen young artists, both musicians and visual artists, working together on works such as experimental ballets, concerts/exhibitions or audiovisual productions. A major group in the renewal of the avant-garde after the war, it inaugurated original forms of performance based on the idea of artistic collaboration. Concerning its musical production, composers such as Takemitsu Tôru 武満徹 (1930-1996), Suzuki Hiroyoshi 鈴木博義 (1931-2006) or Yuasa Jôji 湯浅譲二 (1929-) explored the potential of tape music early on and became its pioneers in Japan, alongside other composers like Mayuzumi Toshirô 黛敏郎 (1929-1997) or Akutagawa Yasushi 芥川也寸志 (1925-1989). Contemporaries of the first tape music experiments in Europe, they developed their own way of apprehending this new medium, from an intermedia perspective.

In fact, in their explorations of new interactions between media, the members of the Experimental Workshop were interested in technologies of sound and visual reproduction. In 1953, they created works for an automatic slide projector (ôtosuraido オートスライド). This was a device intended for educational use, developed by the company Tokyo Tsûshin Kôgyô 東京通信工業 (TTK), later renamed Sony, that made it possible to synchronize a slide projector with a sound tape. Subsequently, the group continued its audiovisual experiments with Mobile and Vitrine (1954), which is the first film in Japan using electronic music, and GinRin 銀輪 (silver wheel) (1955), made under the supervision of filmmaker Matsumoto Toshio 松本俊夫 (1932-). Some composers of Jikken Kôbô also created radio dramas in collaboration with the Nihon Hôsô Kyôkai (NHK) 日本放送協会 (Japan Broadcasting Corporation), which opened its experimental sound studio in 1955. For instance, Honô 炎 (flame) by Takemitsu Tôru was broadcast in November 1955 and later became the raw material of the piece Relief statique ルリェフ・スタティク (Static Relief). The tape music of the group was also used as accompaniment for stage performances such as ballets, or even for art exhibitions.

The outcome of all these experiments in the 1950s took the form of a concert in 1956 at the Yamaha Hall, held jointly with two composers of the group Sannin no kai 三人の会 (Society of three) – Mayuzumi Toshirô, Akutagawa Yasushi – and Shibata Minao 柴田南雄 (1916-1996). Entitled Musique concrète/electronic music audition, it marked a turning point in the development of tape music in Japan and outlined the different directions taken by Japanese composers concerning this medium – between a French influence (musique concrète) and a German influence (constructivist electronic music).

Nevertheless, apart from Mayuzumi’s trip to Paris in 1952, during which he attended two concerts of musique concrète, contacts with Europe were almost nonexistent in the first half of the 1950s. We can argue that tape music in Japan developed in a largely self-sufficient way at that time. Composers of the Experimental Workshop had only scattered echoes of the experiments made in France and developed their own way of apprehending this medium. First of all, we notice that, unlike Pierre Schaeffer with musique concrète, they had no wish to theorize or even conceptualize this new music. This is linked to the very nature of Jikken Kôbô, which almost never sought to explain its artistic activities in writing, as evidenced by the lack of a manifesto. Even if they used the same processes of sound transformation – such as reverse playback, slowing or accelerating effects, etc. – they did not share the Schaefferian notion of the « objet sonore ».

Indeed, the tape music of Jikken Kôbô developed from an intermedia perspective, in relation to images and to some forms of narration. It differs from Schaeffer’s concepts because it does not necessarily avoid the anecdotal aspect of recorded sounds. While Schaeffer wanted to abstract the sound in order to place it back in a musical context, composers like Takemitsu readily used sounds of nature or human voices in a dramaturgical perspective more closely linked to radio dramas. This was certainly more suitable for works such as autoslides and music for films or stage performances.

Furthermore, other composers of Jikken Kôbô like Yuasa Jôji, Suzuki Hiroyoshi and Fukushima Kazuo 福島和夫 (1930-) worked with recordings of instrumental pieces they had composed previously and altered them by manipulating the tape as described above. Akutagawa had already made a piece using a recording of instrumental music: Maikurofon no tame no fantajî マイクロフォンのためのファンタジー (Fantasy for microphone), broadcast on NHK in 1952. Considered the first tape music experiment in Japan, it was a superposition of two orchestral recordings with shifting effects. Works like the music of the autoslide Resupyûgu レスピューグ by Yuasa Jôji, which consists of a reversed playback of a piece for piano and flute, were certainly influenced by Akutagawa’s achievements.

My presentation will therefore put into perspective the tape music experiments undertaken by Jikken Kôbô alongside those of the same period in Europe. The aim will be to identify its specificities with regard to musique concrète, radio drama or even the electronic music of the Cologne studio. To this end, I will contextualize the emergence of this music in Japan after the war and try to categorize and explain the different conceptual approaches to tape music inside the group. I will analyse these pieces in their relations with other artistic mediums in order to highlight how their intermedia purpose influenced their conception.

- Marin Escande

Born in 1992, Marin Escande is a second-year PhD student in musicology at Sorbonne University in Paris. His current research concerns the Japanese avant-garde group Jikken Kôbô. His main interests are Japanese contemporary music, new forms of interdisciplinarity and relations between art and society. Since October 2016, he has been a scholarship student researcher at Tokyo University of the Arts. In parallel with his activity as a musicologist, he is also a composition student in instrumental and electronic music.

FUJII, Koichi

Musique concrète of Minao Shibata